bw2data.weighting_normalization

Module Contents

Classes

| Class | Description |
| --- | --- |
| Normalization | LCIA normalization data - used to transform meaningful units, like mass or damage, into "person-equivalents" or some such thing. |
| Weighting | LCIA weighting data - used to combine or compare different impact categories. |

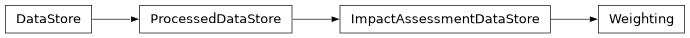

- class bw2data.weighting_normalization.Normalization(name)

Bases: bw2data.ia_data_store.ImpactAssessmentDataStore

LCIA normalization data - used to transform meaningful units, like mass or damage, into “person-equivalents” or some such thing.

The data schema for IA normalization is:

Schema([ [valid_tuple, maybe_uncertainty] ])

where:

- valid_tuple is a dataset identifier, like ("biosphere", "CO2")
- maybe_uncertainty is either a number or an uncertainty dictionary
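As an illustration of the shape this schema describes, here is a minimal sketch in plain Python. It does not call bw2data or its validators. The helper names (`is_real`, `is_dataset_identifier`, `is_number_or_uncertainty`, `looks_like_normalization_data`) are hypothetical, the `"amount"` key for uncertainty dictionaries is an assumption, and the sketch reads each row as an `[identifier, value]` pair, which may be stricter than the real schema.

```python
from numbers import Real


def is_real(value):
    # Treat bool as non-numeric, although bool is a subclass of int.
    return isinstance(value, Real) and not isinstance(value, bool)


def is_dataset_identifier(obj):
    # Hypothetical stand-in for valid_tuple: a 2-tuple of strings,
    # such as ("biosphere", "CO2").
    return (
        isinstance(obj, tuple)
        and len(obj) == 2
        and all(isinstance(part, str) for part in obj)
    )


def is_number_or_uncertainty(obj):
    # Hypothetical stand-in for maybe_uncertainty: a plain number, or a
    # dictionary with a numeric "amount" entry (assumed key name).
    if isinstance(obj, dict):
        return is_real(obj.get("amount"))
    return is_real(obj)


def looks_like_normalization_data(data):
    # A list of two-element rows: [dataset identifier, number or dict].
    return isinstance(data, list) and all(
        isinstance(row, list)
        and len(row) == 2
        and is_dataset_identifier(row[0])
        and is_number_or_uncertainty(row[1])
        for row in data
    )


# Example data with made-up values, one plain number and one dictionary.
normalization_data = [
    [("biosphere", "CO2"), 0.5],
    [("biosphere", "CH4"), {"amount": 12.0}],
]
```

Calling `looks_like_normalization_data(normalization_data)` returns `True` for the example list, and `False` if a row has a malformed identifier or a non-numeric value.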

- class bw2data.weighting_normalization.Weighting(name)

Bases: bw2data.ia_data_store.ImpactAssessmentDataStore

LCIA weighting data - used to combine or compare different impact categories.

The data schema for weighting is a one-element list:

Schema(All( [uncertainty_dict], Length(min=1, max=1) ))
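The one-element constraint can be illustrated with a small plain-Python check. This is a sketch, not the bw2data validator. `looks_like_weighting_data` is a hypothetical helper, and the `"amount"` key is an assumption about what an uncertainty dictionary contains.

```python
from numbers import Real


def looks_like_weighting_data(data):
    # The schema requires a list with exactly one entry (min=1, max=1).
    if not (isinstance(data, list) and len(data) == 1):
        return False
    entry = data[0]
    if not isinstance(entry, dict):
        return False
    # Assumed: the uncertainty dictionary has a numeric "amount" entry.
    amount = entry.get("amount")
    return isinstance(amount, Real) and not isinstance(amount, bool)


# Example data with a made-up weighting factor.
weighting_data = [{"amount": 0.4}]
```

Under these assumptions, an empty list, a list with two dictionaries, or a bare number such as `[0.4]` would all be rejected, because the schema expects exactly one uncertainty dictionary.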